ELK

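ELK is short for Elasticsearch, Logstash, and Kibana. These three are the core components of the stack, but not the whole of it.

Elasticsearch is a real-time full-text search and analytics engine that handles collecting, analyzing, and storing data. It is a scalable distributed system that exposes efficient search through REST and Java APIs, and it is built on top of the Apache Lucene search library.

Logstash is a tool for collecting, parsing, and filtering logs. It supports almost any kind of log, including system logs, error logs, and custom application logs. It can receive logs from many sources, including syslog, message queues (for example RabbitMQ), and JMX, and it can output data in many ways, including email, websockets, and Elasticsearch.

Kibana is a web-based graphical interface for searching, analyzing, and visualizing log data stored in Elasticsearch indices. It retrieves data through Elasticsearch's REST interface, letting users build custom dashboard views of their data and also query and filter it ad hoc.

How it works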

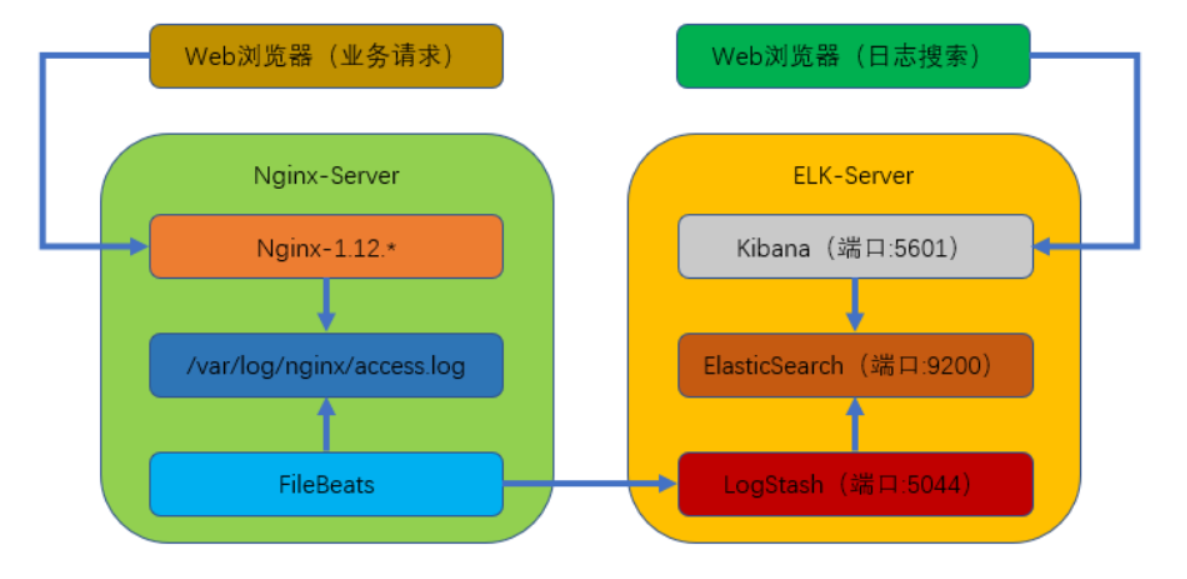

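This deployment uses FileBeat on the client and ElasticSearch + LogStash + Kibana on the server.

A request reaches Nginx on the client machine; Nginx serves it and appends an entry to access.log. FileBeat picks up the new log lines and ships them to LogStash on port 5044. LogStash forwards the log events to ElasticSearch through port 9200 on the same host. Users who want to inspect the logs open Kibana in a browser on server port 5601, and Kibana in turn queries ElasticSearch on port 9200.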
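The hops described above can be summarized in a small shell sketch. The function names `elk_port` and `describe_hop` are hypothetical helpers introduced only for illustration; the port numbers are the ones stated in this walkthrough.

```shell
#!/bin/sh
# Print the listening port of each server-side component,
# using the port numbers given in the walkthrough.
elk_port() {
    case "$1" in
        logstash)      echo 5044 ;;
        elasticsearch) echo 9200 ;;
        kibana)        echo 5601 ;;
        *)             echo "unknown component: $1" >&2; return 1 ;;
    esac
}

# Describe one hop of the pipeline as "source -> destination:port".
describe_hop() {
    port=$(elk_port "$2") || return 1
    echo "$1 -> $2:$port"
}

describe_hop filebeat logstash
describe_hop logstash elasticsearch
describe_hop kibana elasticsearch
describe_hop browser kibana
```

Reading the four lines top to bottom gives the path a log line takes from the Nginx host to the browser, and these are also the ports to open in any firewall between the machines.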

Lab setup: nginx server 192.168.110.99 <filebeat>; ELK server 192.168.110.200 <elasticsearch + logstash + kibana>

Note: Elasticsearch needs a lot of memory to analyze large volumes of data; allow at least 2 GB.

# hostnamectl set-hostname myat-elk

+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

Deploy the JDK

# rz

# tar -xvf /tmp/elk/jdk-8u201-linux-x64.tar.gz -C /usr/local/

# cd /usr/local

# mv jdk1.8.0_201/ jdk1.8

Configure environment variables

# vi /etc/profile

JAVA_HOME=/usr/local/jdk1.8

JRE_HOME=$JAVA_HOME/jre

PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

export JAVA_HOME JRE_HOME PATH CLASSPATH

# source /etc/profile

Install Elasticsearch

# tar -xvf elasticsearch-6.2.3.tar.gz -C /usr/local/

[root@elk ~]# cd /usr/local/elasticsearch-6.2.3/

[root@elk elasticsearch-6.2.3]# ls

bin config lib LICENSE.txt logs modules NOTICE.txt plugins README.textile

Edit the Elasticsearch configuration file elasticsearch.yml

[root@elk ~]# grep "^[a-z]" /usr/local/elasticsearch-6.2.3/config/elasticsearch.yml

node.name: elk

network.host: 192.168.110.200,127.0.0.1

http.port: 9200

By default Elasticsearch refuses to start as root, so create an unprivileged user elk to run it

# useradd elk

# echo "123456" | passwd --stdin elk

# chown -R elk:elk /usr/local/elasticsearch-6.2.3/

# su - elk

Start Elasticsearch

[elk@elk ~]$ cd /usr/local/elasticsearch-6.2.3/

[elk@elk elasticsearch-6.2.3]$ ./bin/elasticsearch -d

The startup progress and any errors can be found in the log

[elk@elk elasticsearch-6.2.3]$ cat logs/elasticsearch.log

[2021-07-04T20:48:07,491][ERROR][o.e.b.Bootstrap ] [elk] node validation exception

[2] bootstrap checks failed

[1]: max file descriptors [4096] for elasticsearch process is too low, increase to at least [65536]

[2]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

Fix: raise the system limits; they take effect at the next login

[root@elk ~]# tail -10 /etc/security/limits.conf

* soft nofile 65536

* hard nofile 65536

* soft nproc 4096

* hard nproc 4096

root soft nproc unlimited

root hard nproc unlimited

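Edit the kernel parameter file to raise vm.max_map_count, the maximum number of memory map areas a process may have; the bootstrap check requires at least 262144

[root@elk ~]# vi /etc/sysctl.conf

vm.max_map_count=655300

[root@elk ~]# sysctl -p    # apply immediately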

vm.max_map_count = 655300
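Before starting Elasticsearch again, the two thresholds named in the bootstrap check errors can be compared against the values currently in effect. This is a minimal sketch; `meets_minimum` is a hypothetical helper, and the required values 65536 and 262144 are taken from the error messages in the log above.

```shell
#!/bin/sh
# Compare a current value against a required minimum.
# $1 = label, $2 = current value, $3 = required minimum
meets_minimum() {
    if [ "$2" = "unlimited" ] || [ "$2" -ge "$3" ]; then
        echo "$1: OK ($2 >= $3)"
    else
        echo "$1: too low ($2 < $3)"
        return 1
    fi
}

# Open-file limit of the current shell session.
meets_minimum "max file descriptors" "$(ulimit -n)" 65536 || true

# Kernel-wide limit on memory map areas (Linux only).
if [ -r /proc/sys/vm/max_map_count ]; then
    meets_minimum "vm.max_map_count" "$(cat /proc/sys/vm/max_map_count)" 262144 || true
fi
```

Run it as the elk user in a fresh login session: the nofile limit from limits.conf is applied at login, so a shell opened before the change still reports the old value.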

Start Elasticsearch again

[elk@elk ~]$ cd /usr/local/elasticsearch-6.2.3/

[elk@elk elasticsearch-6.2.3]$ ./bin/elasticsearch -d

[elk@elk elasticsearch-6.2.3]$ cat logs/elasticsearch.log

[elk@elk elasticsearch-6.2.3]$ tail logs/elasticsearch.log

[2021-07-04T21:00:35,186][INFO ][o.e.h.n.Netty4HttpServerTransport] [elk] publish_address {192.168.110.200:9200}, bound_addresses {192.168.110.200:9200}, {127.0.0.1:9200}

[2021-07-04T21:00:35,186][INFO ][o.e.n.Node ] [elk] started

[elk@elk elasticsearch-6.2.3]$ netstat -nlpt

tcp6 0 0 127.0.0.1:9200 :::* LISTEN 12079/java

tcp6 0 0 192.168.110.200:9200 :::* LISTEN 12079/java

tcp6 0 0 127.0.0.1:9300 :::* LISTEN 12079/java

tcp6 0 0 192.168.110.200:9300 :::* LISTEN 12079/java

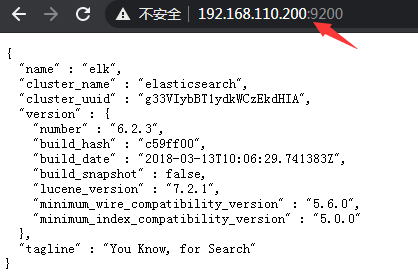

Test and verify
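Besides a browser, the node can be checked from the command line. The sketch below assumes curl is installed and that the node listens on 192.168.110.200:9200 as configured in elasticsearch.yml; `es_url` and `es_get` are hypothetical helper names, while the three paths in the usage comments are standard Elasticsearch endpoints.

```shell
#!/bin/sh
# Address of the Elasticsearch node; override through the environment if needed.
ES_HOST="${ES_HOST:-192.168.110.200}"
ES_PORT="${ES_PORT:-9200}"

# Build the full URL for an API path such as "/" or "/_cat/indices?v".
es_url() {
    echo "http://${ES_HOST}:${ES_PORT}$1"
}

# Fetch an API path, giving up after 5 seconds.
es_get() {
    curl -s --max-time 5 "$(es_url "$1")"
}

# Usage (requires a running node):
#   es_get /                          # node name, cluster name, version
#   es_get '/_cluster/health?pretty'  # cluster status: green, yellow, or red
#   es_get '/_cat/indices?v'          # list of indices
```

A single-node setup like this one typically reports a yellow cluster status once indices exist, because replica shards have no second node to be assigned to.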

++++++++++++++++++++++++++++++++++++++++++++++++++

Install and configure Kibana

[root@elk ~]# tar -xf /tmp/elk/kibana-6.2.3-linux-x86_64.tar.gz -C /usr/local/

[root@elk ~]# cd /usr/local/kibana-6.2.3-linux-x86_64/

[root@elk kibana-6.2.3-linux-x86_64]# ls

bin data node NOTICE.txt package.json README.txt ui_framework

config LICENSE.txt node_modules optimize plugins src webpackShims

Configuration:

[root@elk ]# grep "^[a-z]" /usr/local/kibana-6.2.3-linux-x86_64/config/kibana.yml

server.port: 5601

server.host: "192.168.110.200"

server.name: "elk"

elasticsearch.url: "http://localhost:9200"

kibana.index: ".kibana"

Start Kibana

[root@elk kibana-6.2.3-linux-x86_64]# cd /usr/local/kibana-6.2.3-linux-x86_64/

[root@elk kibana-6.2.3-linux-x86_64]# nohup bin/kibana &

[1] 12381

[root@elk kibana-6.2.3-linux-x86_64]# netstat -nlpt

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1041/sshd

tcp 0 0 192.168.110.200:5601 0.0.0.0:* LISTEN 12381/bin/../node/b

++++++++++++++++++++++++++++++++++++++++++++++++++

Install and configure Logstash

[root@elk elk]# tar -xvf /tmp/elk/logstash-6.2.3.tar.gz -C /usr/local/

[root@elk elk]# ls /usr/local/logstash-6.2.3/

bin CONTRIBUTORS Gemfile lib logstash-core modules tools

config data Gemfile.lock LICENSE logstash-core-plugin-api NOTICE.TXT vendor

[root@elk elk]#

Configure the log format

[root@elk elk]# cd /usr/local/logstash-6.2.3/

[root@elk logstash-6.2.3]# vi vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/grok-patterns

# Nginx log

WZ ([^ ]*)

NGINXACCESS %{IP:remote_ip} \- \- \[%{HTTPDATE:timestamp}\] "%{WORD:method} %{WZ:request} HTTP/%{NUMBER:httpversion}" %{NUMBER:status} %{NUMBER:bytes} %{QS:referer} %{QS:agent} %{QS:xforward}
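To see which fields the NGINXACCESS pattern is meant to capture, a sample line can be run through a rough sed equivalent. This is only an approximation: the grok patterns IP, HTTPDATE, NUMBER, and QS are stricter than the plain character classes used here. The sample log line and the helper name `parse_nginx_line` are made up for illustration, and the line assumes an access log format that ends with a quoted X-Forwarded-For field, which is what the trailing %{QS:xforward} expects.

```shell
#!/bin/sh
# Rough ERE equivalent of the NGINXACCESS pattern. Prints selected fields
# for a matching line and nothing for a line that does not match.
parse_nginx_line() {
    printf '%s\n' "$1" | sed -nE \
        's/^([0-9.]+) - - \[([^]]+)] "([A-Z]+) ([^ ]*) HTTP\/([0-9.]+)" ([0-9]+) ([0-9]+) "[^"]*" "[^"]*" "[^"]*"$/remote_ip=\1 method=\3 request=\4 status=\6 bytes=\7/p'
}

# Hypothetical access log entry in the expected format.
sample='192.168.110.1 - - [04/Jul/2021:21:30:00 +0800] "GET /index.html HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"'

parse_nginx_line "$sample"
# prints: remote_ip=192.168.110.1 method=GET request=/index.html status=200 bytes=612
```

If real log lines produce no output here, the Nginx log_format probably differs from what the pattern expects, and Logstash would tag those events with _grokparsefailure.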

Create the default pipeline configuration file and edit it

[root@localhost logstash-6.2.3]# vim default.conf

input {

  beats {

    port => "5044"

  }

}

# Filter the data

filter {

  grok {

    match => {"message" => "%{NGINXACCESS}"}

  }

  geoip {

    # Field holding the Nginx client IP, as extracted by the grok pattern

    source => "remote_ip"

  }

}

# Output to port 9200 on this host, where the Elasticsearch server listens

output {

  elasticsearch {

    hosts => ["127.0.0.1:9200"]

  }

}

Start Logstash

[root@localhost logstash-6.2.3]# nohup bin/logstash -f default.conf &

[1] 8602

[root@localhost logstash-6.2.3]# tailf nohup.out

[root@localhost logstash-6.2.3]# netstat -pant | grep 5044

tcp6 0 0 :::5044 :::* LISTEN 8602/java

========================================================

On the server whose logs are to be collected (nginx), install and deploy FileBeat to collect the logs

[root@web-1 tmp]# tar -xvf /tmp/filebeat-6.2.3-linux-x86_64.tar.gz -C /usr/local/

[root@web-1 local]# cd filebeat-6.2.3-linux-x86_64/

[root@web-1 filebeat-6.2.3-linux-x86_64]# ls

fields.yml filebeat.reference.yml kibana module NOTICE.txt

filebeat filebeat.yml LICENSE.txt modules.d README.md

Configure Filebeat

[root@web-1 ~]# grep -vE "#|^$" /usr/local/filebeat-6.2.3-linux-x86_64/filebeat.yml

filebeat.prospectors:

- type: log

  enabled: true

  paths:

    - /var/log/nginx/*.log

filebeat.config.modules:

  path: ${path.config}/modules.d/*.yml

  reload.enabled: false

setup.template.settings:

  index.number_of_shards: 3

setup.kibana:

output.logstash:

  hosts: ["192.168.110.200:5044"]

#processors:

# - add_host_metadata: ~

# - add_cloud_metadata: ~

Start Filebeat

[root@web-1 ~]# cd /usr/local/filebeat-6.2.3-linux-x86_64/

[root@web-1 filebeat-6.2.3-linux-x86_64]# nohup ./filebeat -e -c filebeat.yml &

View the log

[root@web-1 filebeat-6.2.3-linux-x86_64]# tailf nohup.out

Check the Filebeat process

[root@web-1 ~]# ps -ajx | grep file

3629 6301 6301 3629 pts/0 3629 Sl 0 0:00 ./filebeat -e -c filebeat.yml

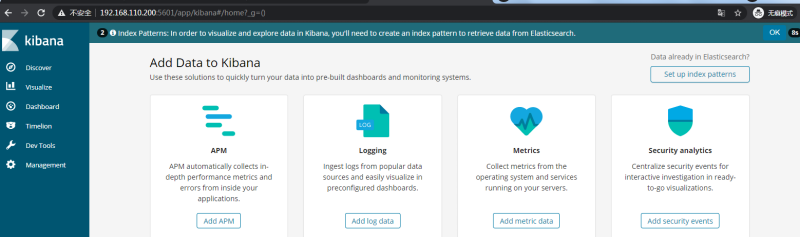

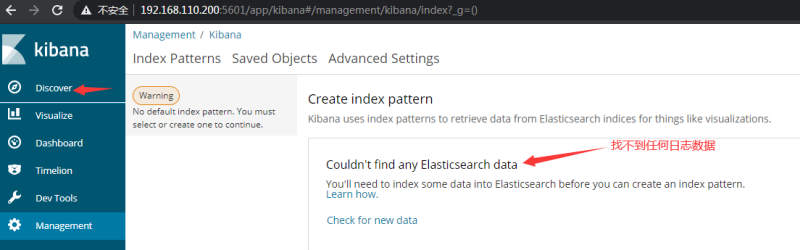

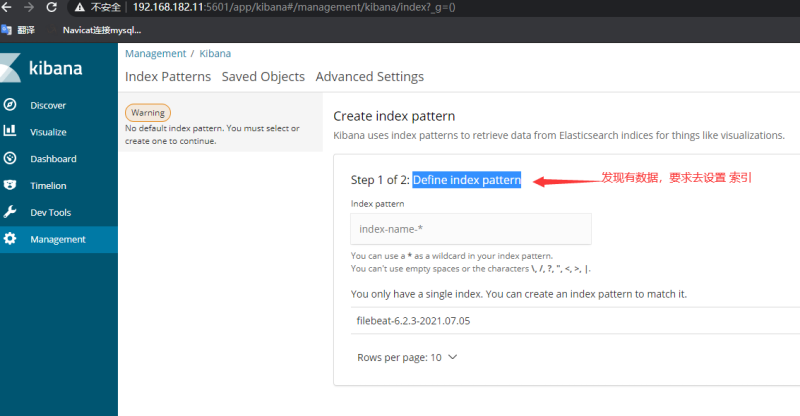

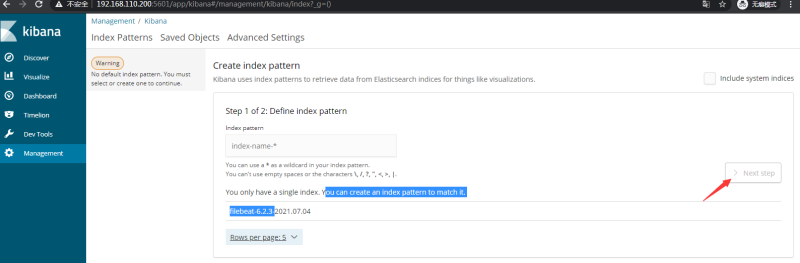

Refresh Kibana and check whether data has arrived

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

Test:

> Access the nginx server to generate log data
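One way to generate a batch of access log entries is a short curl loop. This is a sketch under assumptions: the Nginx host is taken to be reachable at 192.168.110.99 on port 80 (adjust NGINX_URL otherwise), curl must be installed, and `generate_traffic` is a hypothetical helper name.

```shell
#!/bin/sh
# URL of the Nginx server to hit; override through the environment if needed.
NGINX_URL="${NGINX_URL:-http://192.168.110.99/}"

# Send N requests and print one HTTP status code per line.
# $1 = number of requests
generate_traffic() {
    count="$1"
    i=0
    while [ "$i" -lt "$count" ]; do
        # Discard the body; -w prints only the status code (000 if unreachable).
        curl -s -o /dev/null --max-time 5 -w '%{http_code}\n' "$NGINX_URL"
        i=$((i + 1))
    done
}

# Usage: generate_traffic 20
```

After a few seconds the new entries should show up in Elasticsearch. With the Logstash elasticsearch output left at its defaults, events go to a daily index named logstash-YYYY.MM.dd, which is the index pattern to create in Kibana.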

++++++++++++++++++++++++++++++++++++++++++++++++++++++++